A Simple Exploration of Local Speech-Text Conversion + Large Language Model Dialogue in Unity

Foreword

The higher-ups cut my research direction, so I resigned this week. Perhaps the real problem was an imbalance between power and responsibility: whenever the middle office tried to push its own work forward, its members were always tied up with project tasks. Over time, I started researching things that interested me and that might also be useful for the project. Overall, I gained a lot. I learned about ComfyUI's AIGC workflow from the colleague sitting next to me, who handles the full AI stack from training to deployment, and I plan to study it as my master's thesis topic over the next six months (he was laid off before me, which is a bit of black humor). I will also be more cautious in the future about olive branches that come with team-lead or producer titles (although this time I did also want to use the internship to try out different roles).

Introduction

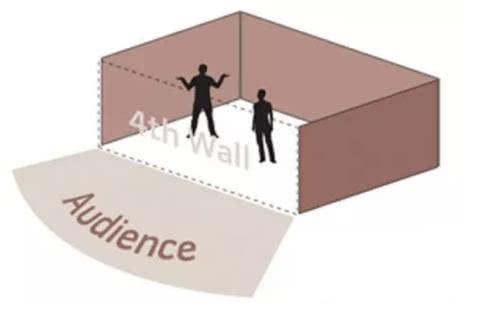

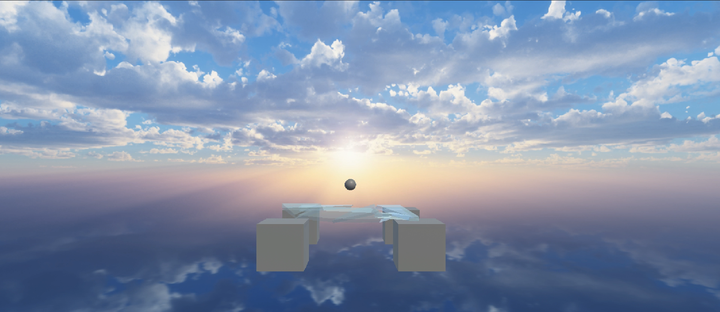

This demo was inspired by Whisper from the Star and by an interest in intelligent NPCs. I don't think it is realistic for existing games to be entirely AI-driven, but giving AI some wiggle room within an existing framework is workable. For example, in an RPG's tutorial, the village chief commissions you to clear out the slimes in the village. You can choose to talk to the chief: “Codger, I’m new here and unequipped, so…” Depending on your sincerity and eagerness, the chief might give you some herbs and the 「best sword in the village」 to replace your 「beginner’s wooden sword」. You can also squeeze more out of him each time you accept or complete a commission. However, when you reach the neighboring village, its chief might insult you outright: “I’ve heard of you, you greedy guy…” (The setting: the two villages trade resources frequently, and the neighboring chief is younger and more outspoken.)

I don't like the AI in Whisper from the Star. It feels like situational role-play chat that gets forced back onto a linear node at fixed points. I would rather preset character personalities and relationships, then let the AI raise or lower friendliness based on player interaction, producing different dialogue and actions that help or hinder the player (giving items or joining a battle, versus badmouthing the player behind their back or even challenging them to a duel). This is quite similar to how I write stories: set the stage, place the characters, and the story unfolds naturally. Perhaps “character is destiny.” Hmm… Honkai Impact 3rd is still chasing me.
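The friendliness mechanic described above can be sketched as a clamped affinity score per NPC. Each affinity band maps to a coarse action, and a weighted rumor carries part of any change over to linked NPCs, such as the neighboring chief who trades with the first village. This is a minimal Python sketch under stated assumptions. The -100..100 scale, the thresholds, the action names, and the helpers `NpcRelation` and `spread_rumor` are all hypothetical. In the project, the delta would presumably come from the LLM's judgment of the player's line.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class NpcRelation:
    """Hypothetical per-NPC attitude toward the player."""
    name: str
    affinity: int = 0  # assumed scale: -100 (hostile) to 100 (devoted)

    def adjust(self, delta: int) -> None:
        # Clamp so repeated interactions cannot push the value off the scale.
        self.affinity = max(-100, min(100, self.affinity + delta))

    def action(self) -> str:
        # Map the affinity band to a coarse behaviour the game logic can execute.
        if self.affinity >= 60:
            return "join_battle"
        if self.affinity >= 20:
            return "give_item"
        if self.affinity > -20:
            return "neutral"
        if self.affinity > -60:
            return "badmouth"
        return "duel"


def spread_rumor(source_delta: int, links: List[Tuple[NpcRelation, float]]) -> None:
    """Pass a weighted share of an affinity change to linked NPCs.

    Each weight in [0, 1] stands for how closely the listener is connected to
    the source, e.g. how often two villages trade. Shares truncate toward zero.
    """
    for listener, weight in links:
        listener.adjust(int(source_delta * weight))
```

For example, squeezing the first chief for a better sword might cost `-50` affinity with him and, through a `0.5` trade link, `-25` with the neighboring chief. That is enough to move both into the "badmouth" band.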

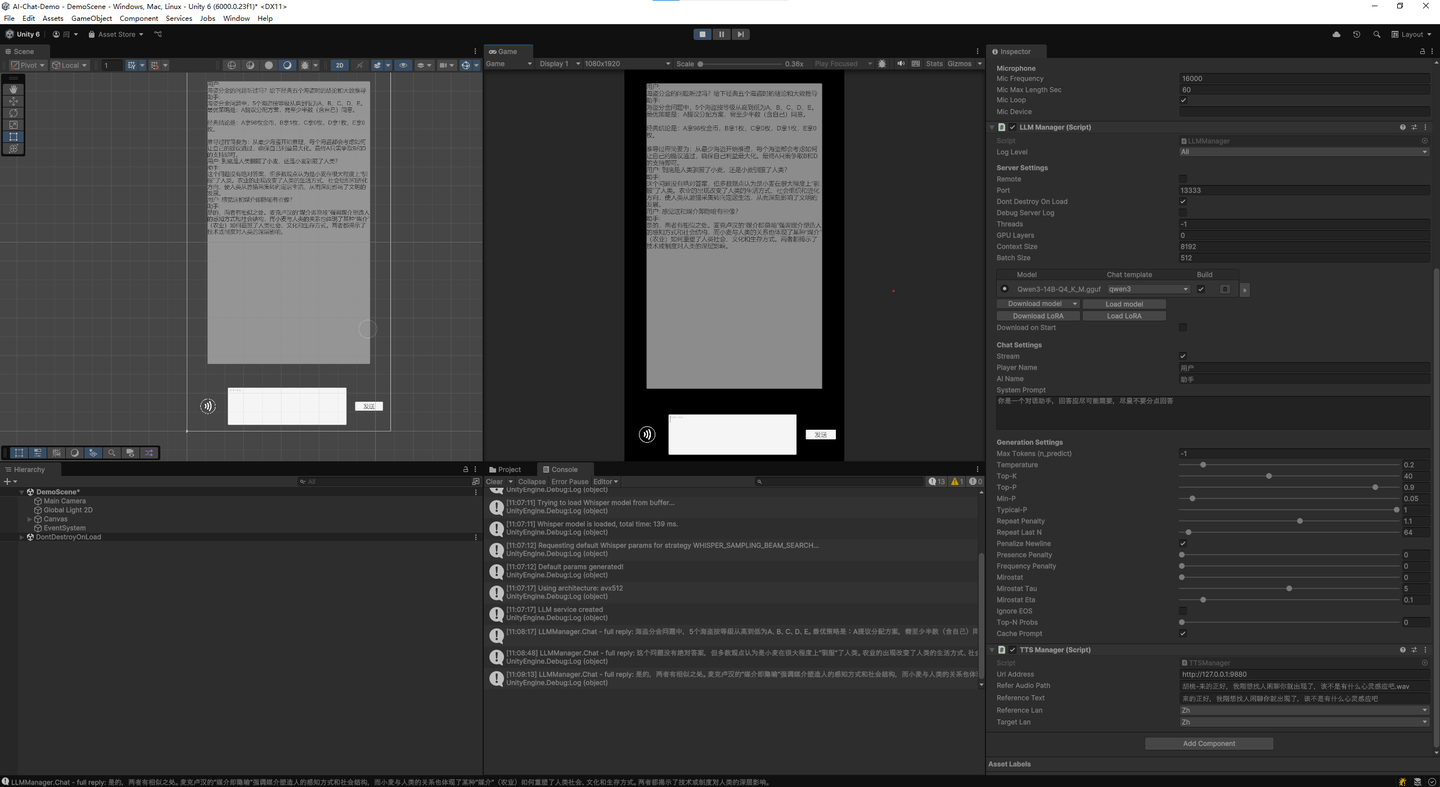

The project currently integrates local speech-text conversion in both directions with large language model dialogue. Speech-to-text is implemented with whisper.unity, LLM dialogue with LLM for Unity, and text-to-speech with GPT-SoVITS. Only text-to-speech runs outside Unity, as a separate local service reached through a local port. I tested several Unity text-to-speech plugins, but they sounded too mechanical, even worse than plain electronic sounds. So I settled on this voice-cloning approach, which clones a voice from a reference clip of less than ten seconds.
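Because GPT-SoVITS runs as a separate local service, the game only needs to send it one HTTP request per line of dialogue. In Unity this would typically go through `UnityWebRequest`. The Python sketch below only illustrates the shape of that request. The `/tts` path, port 9880, and the parameter names are assumptions based on the `api_v2.py` server bundled with recent GPT-SoVITS versions, so check them against the version you run. `build_tts_url` and `synthesize` are hypothetical helpers.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed default address of a locally running GPT-SoVITS API server.
TTS_HOST = "http://127.0.0.1:9880"


def build_tts_url(text: str, ref_audio_path: str, prompt_text: str,
                  text_lang: str = "en", prompt_lang: str = "en",
                  host: str = TTS_HOST) -> str:
    """Build a GET request URL for the local TTS service (parameter names assumed)."""
    params = {
        "text": text,                      # the line the NPC should speak
        "text_lang": text_lang,
        "ref_audio_path": ref_audio_path,  # reference clip under ten seconds
        "prompt_text": prompt_text,        # transcript of the reference clip
        "prompt_lang": prompt_lang,
        "media_type": "wav",
    }
    return f"{host}/tts?{urlencode(params)}"


def synthesize(text: str, ref_audio_path: str, prompt_text: str,
               timeout: float = 60.0) -> bytes:
    """Fetch synthesized audio bytes; requires the local server to be running."""
    with urlopen(build_tts_url(text, ref_audio_path, prompt_text), timeout=timeout) as resp:
        return resp.read()
```

Using a separate process keeps Unity free of Python dependencies. The cost is that the service has to be started before the game and kept reachable on that port.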

The project stops at the current dialogue interface because the latency of the fully local solution is unacceptable. To get decent results, the language model needs at least 4B parameters, which can only just stream text in real time. Converting that text to speech, however, means waiting for the text to be generated, and synthesizing the speech takes about as long as generating the complete text answer. So even if each sentence is sent for synthesis as soon as it is complete, it still takes six or seven seconds from player input to spoken feedback. One option is to drop speech entirely, or to borrow from Hollow Knight: recognize the emotion of the conversation and answer in a made-up bug language. I would rather explore calling online APIs, but that takes a lot of time and energy.

At present, autumn recruitment and my thesis take up most of my energy. I applied for programmer, designer, and artist roles in autumn recruitment, and I chose a field I am unfamiliar with for my thesis; sometimes I feel my weirdness has reached a new level. All I can say is that there is a long way to go, and I will continue next time.
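The sentence-by-sentence handoff mentioned above amounts to buffering streamed tokens and releasing each sentence as soon as its closing punctuation arrives. The minimal Python sketch below assumes tokens arrive as plain strings and that sentence boundaries can be detected from ASCII and full-width CJK punctuation alone. `sentences_from_stream` is a hypothetical helper, not part of LLM for Unity.

```python
import re
from typing import Iterable, Iterator

# A run of sentence-ending marks, covering ASCII and full-width CJK punctuation.
_SENTENCE_END = re.compile(r"[.!?。！？…]+")


def sentences_from_stream(tokens: Iterable[str]) -> Iterator[str]:
    """Regroup streamed LLM tokens into complete sentences for TTS.

    Simplification: any '.' ends a sentence, so "3.5" or "Mr." splits early.
    """
    buffer = ""
    for token in tokens:
        buffer += token
        # Emit every complete sentence currently sitting in the buffer.
        match = _SENTENCE_END.search(buffer)
        while match is not None:
            sentence = buffer[: match.end()].strip()
            buffer = buffer[match.end():]
            if sentence:
                yield sentence
            match = _SENTENCE_END.search(buffer)
    # Whatever remains when the stream ends is the final, unterminated sentence.
    tail = buffer.strip()
    if tail:
        yield tail
```

Each yielded sentence can be queued for synthesis while the model keeps generating. This only hides part of the delay, because the first sentence still has to be fully generated and then synthesized before anything is heard.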

There is a lot more on the to-do list: build a village scene and provide prompts and reference audio for interactions with NPCs such as the village chief, villagers, and guards; add an online-API option for speech-text conversion and LLM dialogue alongside the local one; and define a structured return format for LLM replies, so that trigger events can be passed to the game logic and the current emotion to the text-to-speech module…
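One way to approach the return-format item is to prompt the model to answer with a small JSON object and parse it defensively. The spoken text, an emotion label, and a list of event strings would then be routed to TTS and game logic respectively. In this Python sketch, the field names (`text`, `emotion`, `events`), the emotion set, and `parse_reply`/`NpcReply` are hypothetical choices rather than anything LLM for Unity provides.

```python
import json
from dataclasses import dataclass, field
from typing import List

# Hypothetical emotion labels that the TTS side would have reference audio for.
ALLOWED_EMOTIONS = {"neutral", "happy", "angry", "sad"}


@dataclass
class NpcReply:
    text: str                                        # line to display and speak
    emotion: str = "neutral"                         # forwarded to text-to-speech
    events: List[str] = field(default_factory=list)  # forwarded to game logic


def parse_reply(raw: str) -> NpcReply:
    """Parse an LLM reply that was prompted to answer as a JSON object."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        # The model ignored the format: treat the whole output as plain speech.
        return NpcReply(text=raw.strip())
    if not isinstance(data, dict):
        return NpcReply(text=raw.strip())
    emotion = data.get("emotion", "neutral")
    if not isinstance(emotion, str) or emotion not in ALLOWED_EMOTIONS:
        emotion = "neutral"  # unknown labels fall back instead of breaking TTS
    raw_events = data.get("events", [])
    if not isinstance(raw_events, list):
        raw_events = []
    events = [e for e in raw_events if isinstance(e, str)]
    return NpcReply(text=str(data.get("text", "")), emotion=emotion, events=events)
```

Falling back to plain text keeps the conversation going when a small local model breaks format, which is likely to happen now and then.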

Pipeline

The project splits these three functions across three managers: STTManager, LLMManager, and TTSManager. The models used by STTManager are located in Assets/StreamingAssets/Whisper. Out of the box, only the Tiny model is included, and its recognition accuracy is mediocre. If you need a larger model, download it, place it in that folder, and name it correctly (ggml-
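The three-manager split can be pictured as a chain of three stages, each handing its output to the next. This sketch is in Python rather than the project's Unity C#. `DialoguePipeline` and its stage signatures are hypothetical, and real managers would run asynchronously through coroutines or callbacks rather than blocking as shown here. The per-stage timings are a cheap way to see which stage dominates the delay.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict


@dataclass
class DialoguePipeline:
    """Hypothetical stand-in for STTManager -> LLMManager -> TTSManager."""
    stt: Callable[[bytes], str]   # microphone audio in, player text out
    llm: Callable[[str], str]     # player text in, NPC reply text out
    tts: Callable[[str], bytes]   # reply text in, synthesized audio out
    timings: Dict[str, float] = field(default_factory=dict)

    def _timed(self, stage: str, fn: Callable[[Any], Any], arg: Any) -> Any:
        # Record wall-clock seconds per stage to see where the latency goes.
        start = time.perf_counter()
        result = fn(arg)
        self.timings[stage] = time.perf_counter() - start
        return result

    def respond(self, mic_audio: bytes) -> bytes:
        text = self._timed("stt", self.stt, mic_audio)
        reply = self._timed("llm", self.llm, text)
        return self._timed("tts", self.tts, reply)
```

Passing each stage in as a callable makes it easy to swap a local backend for an online API later without touching the rest of the chain.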

Conclusion

My biggest takeaway from this project is that AI NPCs are much more complex than I had imagined. No wonder most AI applications are still limited to role-play dialogue. First, building the logic for player-NPC and NPC-NPC interaction is crucial. A faithful simulation like Stanford's AI Town would be too costly; perhaps it would be enough to randomly roll each character's morning, afternoon, and evening schedule, then let characters who share the same time and place exchange information. Second, players must be kept from breaking the fiction (think of the classic "you are a catgirl" prompt injections, or telling NPCs they are just NPCs in a game…). With my limited resources and ability, I'll stop here for now and hope to come back and refine it later.
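The cheaper alternative to an AI Town style simulation, where each character's schedule is rolled and characters sharing a time and place swap information, might look roughly like this. In this Python sketch, `roll_schedule`, `exchange_gossip`, the three fixed slots, and representing knowledge as sets of fact strings are all assumptions for illustration. In a fuller version, the pooled facts would presumably be fed into each NPC's prompt.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Set

SLOTS = ("morning", "afternoon", "evening")


def roll_schedule(rng: random.Random, locations: Sequence[str]) -> Dict[str, str]:
    """Randomly pick where an NPC spends each time slot of the day."""
    return {slot: rng.choice(locations) for slot in SLOTS}


def exchange_gossip(schedules: Dict[str, Dict[str, str]],
                    knowledge: Dict[str, Set[str]]) -> None:
    """Let NPCs in the same place during the same slot pool what they know.

    Slots run in order, so a fact learned in the morning can be passed on
    in the afternoon. Mutates `knowledge` in place.
    """
    for slot in SLOTS:
        # Group NPCs by their location during this slot.
        groups: Dict[str, List[str]] = defaultdict(list)
        for npc, day in schedules.items():
            if slot in day:
                groups[day[slot]].append(npc)
        for members in groups.values():
            pooled: Set[str] = set()
            for npc in members:
                pooled |= knowledge.setdefault(npc, set())
            for npc in members:
                knowledge[npc] |= pooled
```

With this, the greedy-player rumor can reach the neighboring chief without any simulation beyond who was where. For instance, the village chief might tell a guard in the morning, and then meet the neighboring chief at a tavern in the evening.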